Economics Nobel Prize winner Daniel Kahneman’s 2021 book, Noise: A Flaw in Human Judgment, co-written with Olivier Sibony and Cass Sunstein, is about the typically large, and usually undesirable, variability – “noise” – in people’s judgments when they evaluate the same information, i.e. information that would be expected to lead to the same, or very similar, judgments.

Examples of noisy expert judgments include variability in doctors’ diagnoses of patients with the same condition, differences in sentences handed down by judges to people who have committed the same crime, and divergences in professors’ grades for the same students’ work.

Noise is present in all judgments in which decision-makers are required to assess and integrate diverse bits of information to reach a decision, where such decisions can be evaluative or predictive in nature.

According to Kahneman, Sibony and Sunstein: “If there is more than one way to see anything, people will vary in how they see it.”

Other examples of noisy decision-making include human resources applications such as evaluating and ranking job candidates and performance appraisals, social services decisions such as child custody interventions, the awarding of university scholarships and research grants, strategic decision-making, government policy-making, investing, project management, procurement, competition judging, economic forecasting, and so on.

Noise is usually undetected and unexpected

Noise arises because people are different in how they access, integrate and evaluate the information they base their decisions on, which reflects differences in people’s expertise, intelligence, preferences, personality, mood, effort, ‘triggers’, etc.

However, most people are oblivious to the inherent idiosyncrasy and randomness in their judgments and resulting decisions, and so noise usually goes undetected.

When noise is detected, or pointed out to people, it often comes as a shock – especially to people who consider themselves to be ‘experts’! “Wherever there is judgment there is noise, and more of it than you think.”

Potentially dangerous and best avoided

Variability in people’s judgments, reflecting differences in their personal preferences, is to be celebrated in creative or personal circles, such as writing a song or choosing a new coat.

However, in professional settings, such judgmental variability (noise) translates into unpredictable errors that can be dangerous, especially when the outcome is important or critical, e.g. risking patients being misdiagnosed and defendants unfairly sentenced.

Accurate, consistent decision-making is especially desirable for applications that are repeated, such as diagnosing patients, sentencing criminals, assessing students’ work, etc. Doing otherwise – in effect, decision-making that is arbitrary or capricious – is both inefficient (wasteful of resources) and unfair. Decisions should not depend on the ‘good’ or ‘bad’ luck associated with who makes the decision: in effect, turning decision-making into a lottery.

For example, it’s reasonable to expect that when patients present with the same symptoms they should receive very similar, if not the same, diagnoses (preferably, the ‘right’ one!). The notion that a given patient’s diagnosis (and treatment) depends on the lottery of which doctor they see, and might have been different had they seen another doctor, is disturbing.

‘Noise auditing’

Kahneman and his co-authors recommend that decision-makers and organizations conduct ‘noise audits’ to raise awareness of the problem and acknowledge it, so that something can be done about it.

In a noise audit, case scenarios containing the same information are presented to decision-makers, who are asked to make their judgments individually, e.g. diagnosing patients or sentencing defendants. These judgments are then compared to gauge their variability.
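The comparison step of a noise audit can be sketched in a few lines of Python. This is only an illustration: the case names and numbers are invented, and range and standard deviation are just two of several reasonable ways to summarize spread, not a method prescribed by the book.

```python
from statistics import mean, pstdev

# Hypothetical data: each key is one case scenario, each list holds the
# judgments that different decision-makers gave independently for that case
# (e.g. sentence lengths in months). All values are invented.
judgments = {
    "case_A": [12, 14, 13, 12],
    "case_B": [6, 24, 12, 36],
    "case_C": [48, 50, 47, 49],
}

def noise_summary(scores):
    """Return the mean, range and standard deviation of one case's judgments."""
    return {
        "mean": mean(scores),
        "range": max(scores) - min(scores),
        "std_dev": pstdev(scores),
    }

audit = {case: noise_summary(scores) for case, scores in judgments.items()}

# List the cases from most to least noisy.
for case, summary in sorted(audit.items(), key=lambda kv: kv[1]["std_dev"], reverse=True):
    print(f"{case}: mean={summary['mean']:.1f}, "
          f"range={summary['range']}, sd={summary['std_dev']:.2f}")
```

In this made-up data, the judges broadly agree on cases A and C but disagree widely on case B, which is the kind of pattern a noise audit is meant to surface.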

An example of a noise audit supported by 1000minds from a recent study is discussed below.

‘Decision hygiene’

With the objective of decreasing judgmental noise and increasing ‘decision hygiene’ to arrive at more valid and reliable decision-making, Kahneman and his co-authors have several key recommendations:

- Ensure that decision-makers are as knowledgeable and competent in the decision-making application as practically possible.

- Specify explicit criteria and weights representing their relative importance – i.e. ‘algorithms’ or ‘formulas’, e.g. using 1000minds – to be used in support of decision-making.

- When multiple decision-makers are to be involved, have them express their preferences independently – to avoid groupthink, bandwagon effects, etc. – and then combine them.
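To make the last two recommendations concrete, here is a minimal sketch of an explicit "formula": a simple additive weighted score, applied to ratings that each decision-maker supplies independently and that are then averaged. The criteria, weights and ratings are hypothetical, and this plain weighted sum is an assumption for illustration; it is not a description of how 1000minds determines weights.

```python
from statistics import mean

# Hypothetical criteria and weights (summing to 1); invented for illustration.
WEIGHTS = {"severity": 0.5, "benefit": 0.3, "urgency": 0.2}

def weighted_score(ratings, weights=WEIGHTS):
    """Combine per-criterion ratings (0-100) into one score using fixed weights."""
    return sum(weights[criterion] * ratings[criterion] for criterion in weights)

def combine_independent(scores):
    """Aggregate scores that were gathered independently from several people."""
    return mean(scores)

# The same alternative, rated separately by three decision-makers.
ratings_by_judge = [
    {"severity": 80, "benefit": 60, "urgency": 40},
    {"severity": 70, "benefit": 50, "urgency": 50},
    {"severity": 90, "benefit": 70, "urgency": 30},
]

individual = [weighted_score(r) for r in ratings_by_judge]
combined = combine_independent(individual)
print([round(s, 1) for s in individual], round(combined, 1))
```

Because every judge's ratings pass through the same weights, any remaining disagreement comes from the ratings themselves rather than from each person weighing the criteria differently.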

1000minds decision-making

1000minds has been used in an extraordinarily wide range of applications to decrease judgmental ‘noise’ and increase ‘decision hygiene’; examples include prioritizing patients for treatment, crimes for investigation, and research questions and grant applications for funding, as well as project management.

Many applications begin with a ‘noise audit’ in which decision-makers take part in a 1000minds ranking survey: simple case studies of real or imaginary alternatives are presented to each participant individually, to be ranked using their intuition.

Participants’ rankings are compared to reveal the variability of decision-makers’ intuitive judgments. This variability often points to the need for a better approach based on explicit criteria and weights (as recommended by Kahneman and his co-authors, as discussed earlier) – e.g. using 1000minds! Most decision-makers aren’t aware of, and are usually surprised by, how much they can differ in their judgments and how idiosyncratic they are.
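One way to put a single number on how much a set of rankings agree is Kendall's coefficient of concordance (W), which runs from 0 (no agreement) to 1 (identical rankings). The sketch below is an assumption about how such a comparison could be done, not a description of what 1000minds or any cited study reports; the rankings are invented and the formula shown applies only to complete rankings without ties.

```python
def kendalls_w(rankings):
    """Kendall's W for complete rankings without ties.

    rankings[j][i] is the rank that rater j gave to item i (1 = top).
    """
    m = len(rankings)       # number of raters
    n = len(rankings[0])    # number of items ranked
    rank_sums = [sum(rater[i] for rater in rankings) for i in range(n)]
    mean_sum = m * (n + 1) / 2
    s = sum((total - mean_sum) ** 2 for total in rank_sums)
    return 12 * s / (m ** 2 * (n ** 3 - n))

# Hypothetical rankings of four alternatives by three participants.
consistent = [[1, 2, 3, 4], [1, 2, 3, 4], [1, 2, 3, 4]]
noisy = [[1, 2, 3, 4], [2, 1, 4, 3], [4, 3, 1, 2]]

print(kendalls_w(consistent))       # identical rankings give W = 1
print(round(kendalls_w(noisy), 3))  # divergent rankings give W close to 0
```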

Example of a ‘noise audit’

In an example from the field of disease classification, Mahmoudian et al. (2021) report on a 1000minds ranking survey involving 34 experts in symptomatic early-stage knee osteoarthritis (OA), a painful and debilitating condition in which cartilage in the knee joint breaks down, causing the bones to rub together.

Each expert was presented, in random order, with 20 patient case scenarios and asked to rank them, based on their clinical experience, by how likely they would be to classify each as an early-stage knee OA patient: from 1st = most likely to 20th = least likely.

The experts comprised 14 orthopedic surgeons, 13 rheumatologists, 2 general practitioners, 2 sports medicine specialists and 3 physical therapists.

The case scenarios included the patients’ clinical signs and symptoms as well as socio-demographic characteristics such as age, gender and social circumstances.

Noise-audit results and decision-hygiene next steps

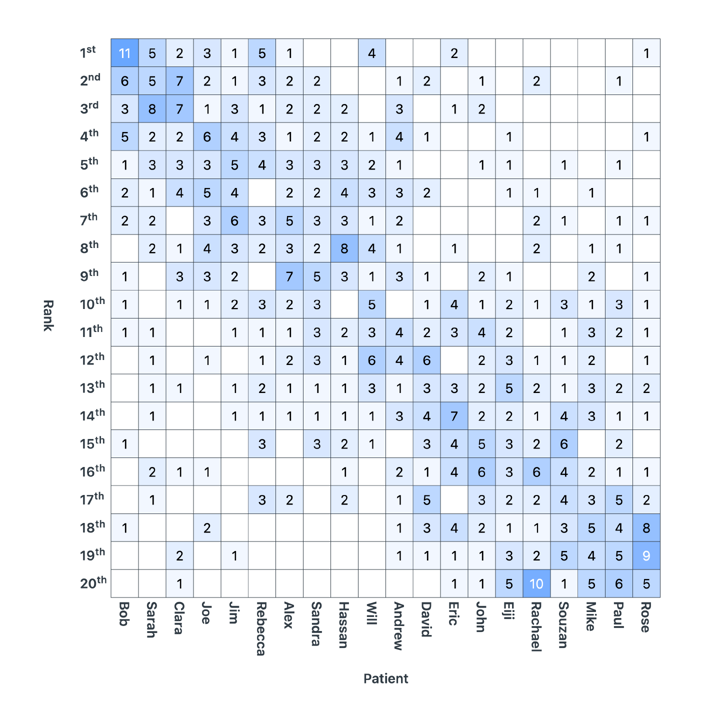

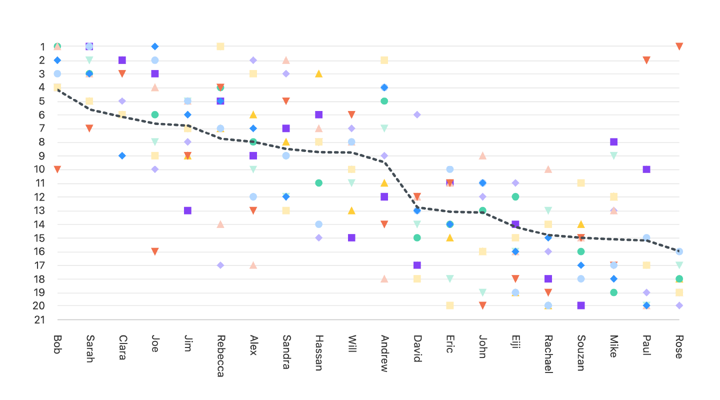

The results from the ranking survey are presented in Table 1 and Figure 1 below.

In the table, the number in each cell is the number of participants who ranked the patient case scenario (horizontal axis) in each rank position (vertical axis).

For example, 11 of the 34 experts ranked ‘Bob’ 1st (i.e. “most likely to have early-stage knee OA”), 5 experts ranked him 2nd, 3 ranked him 3rd, 5 ranked him 4th and 1 ranked him 5th.
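As a quick check on what those counts imply, the short sketch below turns the figures quoted for ‘Bob’ into shares of the 34 experts. Only the numbers stated in the text are used; the counts for the remaining rank positions are in Table 1 and are not reproduced here.

```python
# Counts quoted in the text for 'Bob': rank position -> number of experts.
bob_counts = {1: 11, 2: 5, 3: 3, 4: 5, 5: 1}
n_experts = 34

top5 = sum(bob_counts.values())          # experts who placed Bob in ranks 1-5
share_first = bob_counts[1] / n_experts
share_top5 = top5 / n_experts

print(f"Ranked 1st: {share_first:.0%}; "
      f"ranked in top 5: {top5}/{n_experts} = {share_top5:.0%}")
# prints: Ranked 1st: 32%; ranked in top 5: 25/34 = 74%
```

So even for the case with the strongest consensus, only about a third of the experts put him first.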

As can be seen in both the table and figure, agreement over the rankings for the two most ‘extreme’ patients, ‘Bob’ and ‘Rose’ – on average, 1st and 20th respectively – is higher than for the ‘middle-ranked’ patients.

For example, ‘John’ received an almost full range of rankings, from 2nd to 20th – meaning some experts thought he was very likely to have early-stage knee OA, whereas others thought he was unlikely to.

Overall, the distribution of the experts’ rankings indicates a high degree of disagreement for most cases – in other words, the experts’ judgments are very ‘noisy’.

Based on these results, the authors concluded that what is needed – to reduce the noise and increase decision hygiene – are explicit criteria for classifying patients with early-stage knee OA. They used 1000minds to specify these criteria and determine weights representing their relative importance.

Table 1: Participants' ranks for the 20 patient case scenarios (n=34)

Figure 1: Patients on the x-axis ranked (1-20) by the 34 experts, denoted by colored shapes

References

D Kahneman, O Sibony & C Sunstein (2021), Noise: A Flaw in Human Judgment, Little, Brown Spark.

A Mahmoudian, S Lohmander, M Englund, P Hansen, F Luyten & International Early-stage Knee OA Classification Criteria Expert Panel (2021), “Lack of agreement in experts’ classification of patients with early-stage knee osteoarthritis” (abstract, 2021 OARSI Virtual World Congress on Osteoarthritis: OARSI Connect ’21), Osteoarthritis and Cartilage 29, S299-S300.

See also

1000minds webinar: Group decision-making – How to de-noise decisions & make consistent judgments

Reviews and interviews about Noise: A Flaw in Human Judgment

In which Daniel Kahneman, Olivier Sibony and others discuss the ideas in the book.

- Shankar Vedantam’s Hidden Brain podcast, “Our Noisy Minds”

- Michael Shermer’s conversation with Daniel Kahneman on YouTube

- Evan Nesterak’s “A Conversation with Daniel Kahneman About ‘Noise’” for Behavioral Scientist

- Caroline Criado Perez’s “Book of the Day” review, “The Price of Poor Judgment”, for The Guardian

- Kathryn Ryan’s interview with Olivier Sibony on RNZ

